Creating Participatory Research: Examples from practice

Chapter 1

Chapter 2

Chapter 3

Chapter 4

Chapter 5

Chapter 6

Chapter 8

Chapter 10

Chapter 1

Examples of visual and creative participatory tools that we have used

These example tools use creative approaches to data collection with the aim of encouraging participation beyond answering questions set by researchers. They were used within the context of focus groups and so were combined with traditional methods. Whilst these tools aim to encourage participation, they were designed by researchers, not by those being researched.

Cross, R and Warwick-Booth, L (2015) Using storyboards in participatory research Nurse Researcher 23, 3, pp.8-12

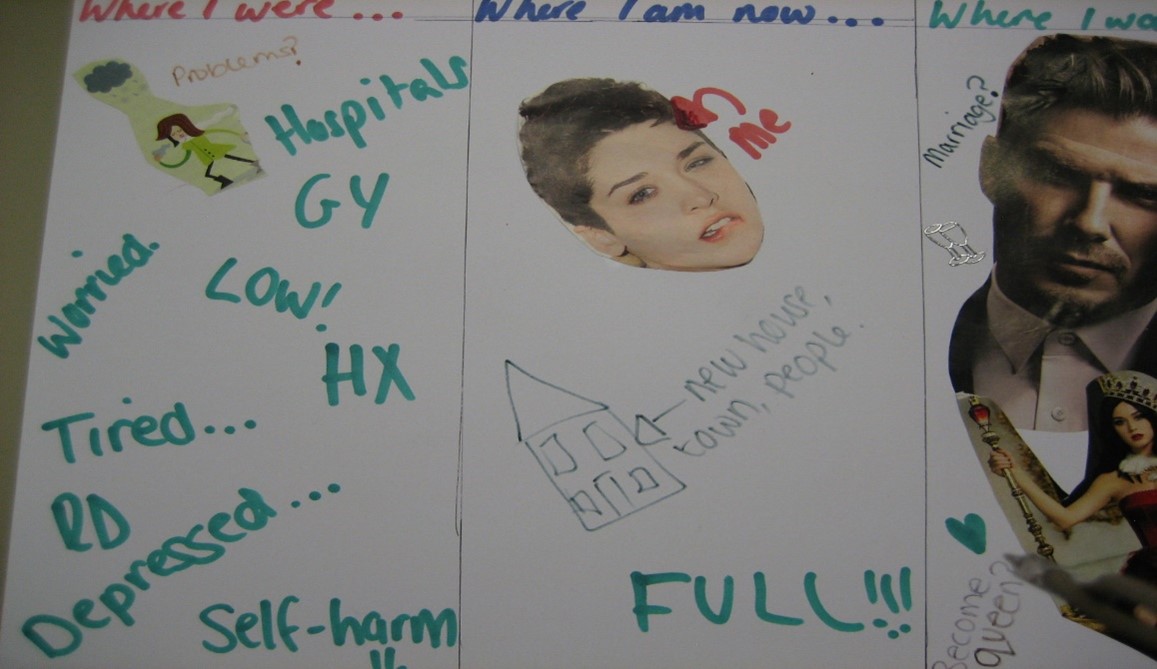

This is an example of using a creative task to engage young women in research more actively by using craft materials and magazines to produce a storyboard of their journey through an intervention, whilst talking together in a focus group. The women were asked to reflect upon where they were at the start of their engagement with the project, where they were at the present time (at the point of the data collection) and where they hoped to be in the future. They produced a board of images and then discussed these with the researchers. See example photo:

In this study we used a metaphor within the data collection approach, again to try and enable people to talk about their experiences in a friendly environment. We asked them to tell us about the support that they were receiving and compare it to the ingredients for a cake. We provided them with recipe ingredients and cookery utensils to support their discussion. The group discussed their ideas, and then produced a list of key ingredients, and ordered them – see example photo:

Chapter 2

Example of coproduction approaches that we have used

| Context | Co-productive approach | Outputs |
| --- | --- | --- |
| Evaluation of a Pilot Telecare Programme for a local authority (2017-2019) | ‘Share and Learn’ workshops were conducted with professionals involved in designing the pilot, those referring clients and staff supporting them via Telecare provision throughout the evaluation. This approach was used to inform the evaluation design by the researchers. Workshop 1 (October 2017) – feedback on the draft theory of change and sharing of good practice from professionals in relation to referral processes. This resulted in learning for the professionals and referrers alike. Workshop 2 (May 2018) – update on the evaluation progress, and feedback on the interview schedule that had been designed to garner the views of service users. The interview schedule was adjusted to reflect the feedback provided by professionals. Workshop 3 (October 2018) – report on the early findings from the evaluation and discussion of dissemination approaches. A dissemination strategy was co-produced by the researchers and professionals. | Film created by local authority staff to ‘sell’ the service more broadly (based on feedback from workshop 1). Traditional ‘final’ evaluation report and accompanying executive summary were produced based upon workshop 3 (Warwick-Booth et al 2019). A decision was made by the local authority to discontinue the pilot programme based on research findings. The dissemination strategy was not implemented. |

Chapter 3

Example from our own practice

In the first six months of the three-year Community Wellbeing Evidence Programme for the What Works Centre for Wellbeing, we carried out an extensive ‘Voice of the User’ exercise, involving stakeholder workshops, online surveys and community sounding boards to determine the research priorities for the programme. Participants were asked what they understood by wellbeing and community wellbeing, and what was important to them in terms of community wellbeing. These priorities were grouped into a framework of ‘people’, ‘place’ and ‘power’ and a report was produced setting out the ‘Voice of the User’. Next, scoping reviews of the literature were carried out to determine where evidence already existed and where there were evidence gaps. Finally, more workshops were held with academics and community groups to feed back on the findings of the scoping reviews and to decide on the top three priorities for the research programme to explore.

Chapter 4

Example of an ethical challenge that we have faced and discussion of the resolution

Using peer researchers to explore experiences of service support related to gender specific complex needs (Warwick-Booth, L and Cross, R. 2018)

As part of a commissioned evaluation project looking at the effectiveness of a gender-specific (women-only) support service, a team of us (all female researchers) suggested that we train service users as peer researchers. Our thinking was that the peer researchers would benefit from the training and generate different data compared to what we might gather as professional researchers. We had experience of such a model (peer research) from other evaluation projects, where it had worked well. The commissioner (also the service provider) supported this idea, as it would help them to meet some of their targets in terms of the involvement of service users. We gained university ethical approval without any issue. Our ethics application included the following detail, specifically about the peer research approach.

The ethics application:

The evaluation team will train peer evaluators and/or of the service users in interviewing their peers (one-day training). The women are then provided with interview schedules, consent forms, information sheets and Dictaphones to enable them to conduct interviews (one to one) with other service users. The women to be trained would be identified by (service provider) staff, as would the sample of women for interviewing. The evaluation team would be able to provide on-going support to those trained, issue them with a certificate after completing the training. All transcribing and analysis would be done by university staff once the voice files were received. We will ensure careful selection of peer researchers with (service provider) support, and carefully train those who volunteer and support them in doing data collection. The training will cover ethical approaches to research, anonymity and confidentiality etc. to ensure that those working as peer researchers are able to operate within ethical expectations and guidelines.

The reality:

We trained 4 women who had used the service but had since exited it; the service provider therefore defined them as no longer in need of support and assumed them to be less vulnerable. These women were selected by the staff who had been working with them, who approached them and asked if they were interested in the training. They came to our university and attended the training that we had designed. Our training session included an overview of our approach to the evaluation, content on focus groups, discussion of the proposed schedule and the associated ethical requirements. A worker from the service provider was also present as a mechanism to ensure that support was readily available should it be needed during the training session. She was also keen to learn more about research as a novice researcher.

We had initially planned to ask women to interview other service users one to one (as per the ethics application) at the point of exit from the service. However, we had started to interview some women exiting already, and found that the interviews were lengthy, contained many disclosures, were upsetting and some of the women needed referring back to the service provider for more support. So in discussion with the service provider, we decided to use focus groups to collect data from service users, so that one of the evaluation team would be present alongside the peer researcher to support them during data collection, allow flexibility related to their confidence levels, and ensure that risk was managed. Had we not been interviewing the service users before the training, we would not have been aware of the complexities of the one-to-one interviews. Is the official approval process adequate here to ensure lay researchers do not experience harm? Were our safety mechanisms and procedures adequate?

Although the 4 women that we trained were no longer in receipt of the support from the service provider, they still had complex needs and were vulnerable as survivors of domestic violence. One of the women was very emotional during the training despite its general focus and used some of the discussion time to talk about her own experiences. Another suggested that if we went ahead with our approach, this would mean that the peer researchers would be revisiting their own experiences. She pointed out that this may not be comfortable emotionally as she felt that she had positively moved on after exiting the service and did not ‘want to go back’. Therefore, whilst the training was a positive experience and the women were really pleased to attend the university and receive a certificate, conducting research with their peers would be an emotional experience for them. Ethically this raises questions about how to manage emotions, risk and lay researcher well-being. Would the benefits of their participation outweigh the risks? How can this be assessed? Would there be enough time available to support the women during the research process?

More positively, during the training session, the women commented upon the schedule that we planned to use, which had been checked by the service provider and had been approved via the ethical system at the university. However, they all felt that one of the questions needed to be amended when they considered how they would feel being asked it. Therefore, the schedule was subsequently amended.

Following on from the training, and discussion with the service provider, we did not proceed with using the peer researchers to gather data because of the issues raised in terms of emotions, risk and safety. In addition, in trying to manage the safety of peer researchers, we had changed our proposed method to a focus group, which raised further ethical questions. For example, would all service users feel comfortable sharing their experiences of support in a focus group context with other women? Would a focus group approach exclude some women, such as those from the South Asian community who are likely to face additional challenges to participation? We therefore continued to gather data ourselves, via one-to-one interviews with women exiting the service, during the evaluation.

Chapter 5

Example from our own practice

Peer research was used as a method of data collection in case studies carried out as part of a mixed methods evaluation of a community empowerment project. The research team contacted local community leaders who acted as gatekeepers in identifying groups of people who were willing and able to be trained as peer researchers. The group then came together to discuss what issues they wished to research. The researcher then joined the group for a two-day training workshop in which they worked together with the peer researchers to come to a decision on which research questions to ask and which methods to use to collect the data. Most chose either interviews or a short survey. Research questions varied across sites, but a common theme was the issue of non-participation and barriers to participation in the project for certain groups of people. The peer researchers received training on the chosen methods, both in the workshop and through follow-up email and telephone conversations with the researcher. They then had several weeks to collect the data, with telephone and email support available from the academic researcher. Once the data were collected, the researcher returned for another workshop in which training was given on how to analyse the findings, and the results were presented and analysed. The academic researcher then wrote up a short report for each site, which was reviewed by the peer researchers before a final copy was sent to the funders.

Chapter 6

Examples of participatory analysis approaches that we have used

Volunteer Listeners Evaluation

As part of a local evaluation of Time to Shine Leeds, a National Lottery funded programme aiming to tackle social isolation amongst the elderly, we were involved in training and supporting older people to become ‘Volunteer Listeners’. Drawing upon an idea from a Volunteer already involved in the project, we worked alongside him to pilot the idea, and then implement it in practice.

The overall aim of the project was to add a very human angle to the impersonal evaluation data gathered nationally for the Big Lottery through questionnaires.

The idea is simple: conversations (as opposed to formal, structured interviews) between project participants (referred to as ‘Storytellers’) and ‘Volunteer Listeners’ are held to gather more in-depth views in the form of stories. Storytellers are recruited from partnership organisations (all involved in the delivery of interventions to tackle social isolation) and their individual stories are written up by volunteer ‘Storywriters’. The Volunteer Listeners are tasked with listening and capturing the stories.

Volunteers were provided with training about the evaluation and the Volunteer Listener approach, and with detailed role descriptions of Listener and Storywriter. Following a small-scale pilot in 2017-2018, the Volunteer Listeners Programme was re-launched in 2019 with the support of the lead voluntary sector organisation (who had originally secured the Big Lottery grant). They advertised and recruited people interested in becoming Volunteer Listeners and Storywriters. Training sessions were held in February, March and October 2019. Following on from the training, 10 Volunteer Listeners (8 women, 2 men) went on to visit community projects and to hold conversations with older people. A total of 26 conversations were held between June and November 2019. These were sent into the evaluation team for inclusion in a summary report.

These volunteers collected data, and then analysed it by writing it into a coherent story about an individual’s experience. There were varying levels of detail in the stories provided: some were hand-written, others neatly typed; some were posted as paper copies and others were emailed. All of the stories were summarised (analysed) by the Storywriters, either in their own voices, or in the voices of those that they had listened to. Storytellers were anonymised by the Storywriter during the writing up process. The evaluation team then used thematic analysis to produce the summary of findings.

Chapter 8

Example of risk to researchers from our own practice – emotional impact of sensitive research

Research with vulnerable populations – reflections from practice

Homeless people

The research concerned a health service being offered to homeless people who attended a homeless centre in a northern city in England. The researcher attended groups in the centre and there was no physical threat: participants had been invited via stakeholders and the interviews took place in the centre, a safe space for participant and researcher alike.

During the work, the participants were not asked any personal questions about the circumstances causing their homelessness but the trust that was built up led many of them to share deeply personal information. They disclosed accounts of being victims of childhood sexual abuse, attempted suicides, severe mental illness, and the impact of disability on their lives.

The researcher was confident that the participants were receiving a high level of support at the homeless centre, and so did not need to report concerns for their wellbeing, but there was a degree of emotional impact for the researcher. It was the end of the university semester before Christmas, so there were few people working, and to guarantee the confidentiality of the work it was inappropriate to discuss the issues that had arisen with people outside of the research team.

In future projects of this nature, the researcher will put a meeting in the diary with a colleague/the PI to debrief properly about the issues raised.

Chapter 10

Examples of participation and the resulting impact from our own work

Several research projects that we have conducted have involved participants being trained as peer researchers (see discussion of volunteer listeners in the web-site material for chapters 2 and 6). One resulting impact is skills development for them, for example through the training and their subsequent involvement in data collection such as interviewing and focus groups. In some instances community members receive training in research methods but are then unable to participate in any data collection for a variety of reasons; even so, in some of our experiences these community members report that they felt more confident as a result of being involved, even for a limited time period. The development of skills, confidence and employability amongst community members involved in the process is discussed within chapter 10 as an impact associated with involvement (Green et al 2000, Pain et al 2015).

In instances where we have co-produced evaluations working in participatory ways with stakeholders who are not professional researchers, we have been able to create outputs that have had practical outcomes. For example, Warwick-Booth, L. and Coan, S. (2016) The Key Evaluation Final Report January 2016 was used as evidence in a successful funding bid to secure money to deliver an amended intervention, 2017-2020.

The increased involvement of vulnerable, underserved and minority community members in the research process (Israel et al 2010) is also cited in the literature as an impact of participatory research. We have worked with several vulnerable community groups (with varying levels of participation from them in the research process), with the aim of giving them voice and documenting what works for them in service provision. See:

- Warwick-Booth, L. and Cross, R. (2020) ‘Changing Lives, Saving Lives: Women Centred Working – an evidence-based model from the UK’ Women’s Health & Urban Life: An International and Interdisciplinary Journal – accepted for publication May 2019.

- Warwick-Booth, L. and Cross, R. (2018) ‘Journeys through a Gender Specific Intensive Intervention Programme: Disadvantaged Young Women’s Experiences within the Way Forward Project’ special issue on youth – Health Education Journal 77, 6, pp. 644–655.

- Warwick-Booth, L., Woodward, J., O’Dwyer, L., Knight, M. and Di Martino, S. (2019) An Evaluation of Leeds CCG Vulnerable Populations Health Improvement Projects April 2019.

- Warwick-Booth, L., Woodward, J. and O’Dwyer, L. (2018) An Evaluation of the Leeds Gypsy and Traveller Health Improvement Project October 2018.